The web has revolutionized most aspects of our daily lives in the private sector. The speed and ease with which today's big platforms let us exchange information, send messages and process purchases prove how far these systems have come in the last 20 years. Unfortunately, the same cannot be said for the public sector.

In Europe, and within each country, there are patchy but virtuous examples of digital processes adopted to simplify public administration with the same efficiency and "user experience" offered by the big tech companies. In Italy things are rather complicated: we have tremendously slow organizations that cannot react with the same speed, which is why most Public Administration (PA) websites seem to be stuck in the '90s.

Hack.Developers Italia, reboot the PA system

If you're reading this page you're most likely a developer. How many times have you found yourself thinking:

“I could do this stuff better in two days!”

I have, many times, and I happen to have valuable experience gained at exactly one of those big IT players, Expedia, so I told myself: why not actually do something?

This is the same thought that moved Diego Piacentini, former VP at Amazon, to come back to Italy specifically to lead the new PA Digital Team pro bono and reboot the country's "digital system". In this spirit, Hack.Developers Italia 2017 took place simultaneously in dozens of locations, both in Italy and abroad, to call on as many developers as possible to contribute to software projects of public interest.

Among the various projects hosted on GitHub that were open to contributions, I picked the smart button for SPID (Sistema Pubblico di Identità Digitale), the Italian public digital identity authentication system.

Just like the now omnipresent social login buttons we are used to seeing everywhere, the final objective of this project is a single JS file that can be imported externally, self-sufficient in terms of HTML, CSS and JavaScript, which provides a centralized and consistent UX for authenticating on PA sites with a single set of credentials.

Starting from the initial draft, I created a new branch with the intent of a big refactoring that changed almost nothing of the original functionality for the user, except for two requested features: the widget should render itself without relying on pre-existing HTML in the page, and the accessible form buttons should support configurable POST/GET submission.
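The configurable POST/GET submission can be pictured with a small helper that builds a real `<form>` whose method comes from the integrator's configuration. This is a hypothetical sketch: `buildProviderForm`, `provider.url`, `provider.entityID`, `provider.name` and the `idp` field name are illustrative assumptions, not the project's actual API.

```javascript
// Hypothetical sketch: all names here are illustrative, not the project's API.
const ALLOWED_METHODS = ['GET', 'POST'];

// Escapes the characters that could break out of an attribute or inject markup.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

function buildProviderForm(provider, options = {}) {
  // Default to POST, but let the integrating site opt into GET.
  const method = String(options.method || 'POST').toUpperCase();
  if (!ALLOWED_METHODS.includes(method)) {
    throw new Error(`Unsupported submission method: ${method}`);
  }
  // A real <form> with a submit <button> stays keyboard and
  // screen-reader accessible, whichever method is used.
  return [
    `<form action="${escapeHtml(provider.url)}" method="${method.toLowerCase()}">`,
    `  <input type="hidden" name="idp" value="${escapeHtml(provider.entityID)}">`,
    `  <button type="submit">${escapeHtml(provider.name)}</button>`,
    '</form>'
  ].join('\n');
}
```

Calling `buildProviderForm(provider, { method: 'GET' })` yields a form submitted via GET, while omitting the option keeps POST; any other method is rejected early instead of silently producing a broken form.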

My main objective was to contribute what I know about managing the life cycle of a front-end project that is meant to live for a long time and be maintained by an unknown number of people, as I learnt at Expedia working on long-term projects together with many other developers.

Code style and IDE agnosticism

Within a big company it's not unusual for developers to work on different kinds of hardware and software: Windows, Mac and Linux, plus every combination of virtual machines for various purposes, both for direct work and for pipelined processes. For development even a plain text editor would do, but the much more comfortable IDEs are many, everyone has their preferences, and each comes with its own set of project metadata.

So, to ensure that a project can be correctly maintained across a wide variety of platforms and IDEs, it's good to build on a basis that doesn't depend on any of them and can easily be adapted to all.

NodeJS is extremely useful for this. With a package.json you can define which modules and commands make up the project and which tools check that everything is going well. It also lets you run a localhost server that works the same way in every environment, and define commands for operations such as the minification of resources, ensuring a streamlined development and testing experience for all devs.

Line endings and version control

Many of us have had the unlucky experience of editing a small part of a file in git only to see the whole file flagged as changed, instead of just the edited lines. This happens when the file was previously saved on a different operating system and our OS/IDE is now changing its line endings (for example CRLF on Windows versus LF on Linux and macOS).

However, with a .gitattributes file it's possible to instruct git on how to handle this and other encoding aspects at commit time, for everyone working on the project.
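A common .gitattributes choice is a single line such as `* text=auto eol=lf`, which asks git to detect text files, store them with LF endings and check them out with LF as well. Git performs that normalization itself; the JavaScript below is only an illustration of why the whole-file diff appears and what normalizing line endings changes.

```javascript
// Illustration only: git does this itself when .gitattributes marks files
// as text. Here we just compare a naive line-by-line diff before and after.
function normalizeEol(text) {
  // Convert Windows (CRLF) and old Mac (CR) endings to LF.
  return text.replace(/\r\n?/g, '\n');
}

function changedLines(before, after) {
  const a = before.split('\n');
  const b = after.split('\n');
  const changed = [];
  for (let i = 0; i < Math.max(a.length, b.length); i++) {
    if (a[i] !== b[i]) changed.push(i + 1); // 1-based line numbers
  }
  return changed;
}

const committed = 'line one\nline two\nline three\n';
// Same file edited on Windows: one real change, but every line now ends in CRLF.
const edited = 'line one\r\nline 2\r\nline three\r\n';

console.log(changedLines(committed, edited)); // [ 1, 2, 3 ]
console.log(changedLines(committed, normalizeEol(edited))); // [ 2 ]
```

Once endings are normalized, only the line that was actually edited shows up as changed.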

Linting: Tabs, JS and CSS

The same objective can be reached in many different ways when coding, but constantly analyzing and discussing different code for the same task is costly: time goes into unresolvable debates over the "best" way to do something when there is in fact no single "right" way. This is why conventions are crucial.

By adopting a written convention that is automatically enforced by the IDE and checked before committing, it's possible to greatly reduce the time spent on pull requests, while also sparing people and working relationships.

For spaces vs tabs there is .editorconfig, which the IDE applies automatically while you type (a plugin may be required). For JavaScript there is .eslintrc and for CSS .stylelintrc: both act as suggestions within the IDE (again via plugin) and as a formal check before committing, through a git hook run by grunt.

Avoiding the FOUC effect

As everyone knows, HTML and CSS are not programming languages, because they lack Turing completeness. Still, some user experience and perceived performance goals can only be reached through a smart combination of the two, which in turn requires a deep knowledge of how the browser uses them.

After the JavaScript module is initialized, an Ajax call is fired to fetch the labels and the providers list. Once they are obtained, button rendering and style loading start, but at that moment the styles are not yet available, so the buttons first appear in their raw form and are progressively enhanced as the CSS finishes loading and applies colors, widths, heights and so on. This behavior is known as FOUC, meaning Flash Of Unstyled Content.

A bit as if Cinderella showed up at the ball in rags and then magically got dressed on the spot, in front of everyone…

By rendering the buttons with the hidden attribute instead, the browser's default stylesheet applies display:none to them. The visible display type for the button is then defined at the very end of the CSS file, so it only takes effect after all the other rules have been downloaded, ensuring that the buttons appear fully styled in one shot.
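A sketch of the two halves of the technique, with hypothetical class names and labels: the markup carries `hidden`, and the rule that reveals the button is the last one in the stylesheet. Author rules take precedence over the browser's default `[hidden] { display: none }`, so that final rule is what makes the button visible.

```javascript
// Sketch of the hidden-attribute technique; class names are hypothetical.

// Markup: `hidden` makes the browser's default stylesheet apply display:none.
// The label is assumed to be trusted text coming from the i18n file.
function renderButton(label) {
  return `<button type="button" class="spid-button" hidden>${label}</button>`;
}

// Stylesheet: all the visual rules come first...
const cssRules = [
  '.spid-button { background: #06c; color: #fff; border-radius: 4px; }',
  '.spid-button:hover { background: #036; }',
  // ...and the rule that reveals the button comes last. As an author rule it
  // overrides the browser default for [hidden], so the button is displayed
  // only when the end of the file has been reached.
  '.spid-button { display: inline-block; }'
];

const stylesheet = cssRules.join('\n');
```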

Legacy-compliant CSS with Autoprefixer

CSS pre-processors like Sass open up a wide range of useful possibilities that plain CSS alone doesn't offer, but this is a double-edged sword: the additional level of abstraction makes it easy to lose track of the actual CSS that is compiled and shipped to browsers and users.

The two most common mistakes I see recurring in many different contexts both come from abusing mixins. The first is using them in place of plain CSS classes, which bloats the file size; the second is creating sets of vendor-prefixed properties that are applied inconsistently across classes and almost never revised as browser support requirements change, requirements that should be agreed upon with the analytics team.

This second issue can be tamed more reliably after the Sass compilation, with PostCSS and Autoprefixer: Autoprefixer reads a moving support baseline defined in .browserslistrc, which determines which rules need to be adapted for legacy browsers, and enriches only those.
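As a sketch, assuming a PostCSS setup that reads postcss-load-config files (as postcss-cli does; the project itself runs its build through grunt, so its wiring may differ), Autoprefixer can be enabled without an inline browser list. It then falls back to .browserslistrc, where queries such as `> 1%` or `last 2 versions` are listed one per line.

```javascript
// postcss.config.js: a minimal sketch in the object format understood by
// postcss-load-config. No browser list is passed to Autoprefixer on purpose,
// so it reads the shared support baseline from .browserslistrc.
const postcssConfig = {
  plugins: {
    autoprefixer: {}
  }
};

module.exports = postcssConfig;
```

Keeping the baseline in .browserslistrc rather than in the build config means other Browserslist-aware tools can share the same support targets.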

Separation of concerns

Keeping in mind that the primary goal of the project is a very lightweight, self-sufficient file, this doesn't necessarily mean that the source code we work on and the final product have to match; in fact, the two requirements somewhat conflict with each other.

The final product we want is a single JS file that, once imported, exposes a set of methods to initialize the button. After the data is successfully retrieved, it downloads the CSS file and injects the HTML into the page, then watches it to handle the dynamic interactions that display the correct panels. All of this with just two files (plus external assets such as backgrounds and logos).

The lighter the files, the better: no libraries and no frameworks, however well minified. Total minimalism, to save as much bandwidth as possible.
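The initialization flow described above (fetch the data, then load the CSS and inject the markup, then watch for interactions) can be sketched without any library. This is a hypothetical outline: `initButton` and its four collaborators are illustrative names, passed in as parameters so the order of operations can be checked with fakes.

```javascript
// Hypothetical sketch of the init flow; none of these names come from the project.
async function initButton({ fetchData, loadCss, render, watch }) {
  // 1. Labels and providers list (the Ajax call)
  const data = await fetchData();
  // 2. Only after a successful response: start loading the stylesheet...
  const cssReady = loadCss();
  // ...and inject the (still hidden) buttons in the meantime
  const root = render(data);
  await cssReady;
  // 3. Listen for the interactions that switch between panels
  watch(root);
  return root;
}
```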

Following this approach, I created separate files for these different aspects within the same module, leveraging prototypal inheritance by extending my core JavaScript object/module so that everything stays under one namespace:

- HTML templates: src/js/agid-spid-enter-tpl.js

- localization: src/js/agid-spid-enter-i18n.js

- JS logic: src/js/agid-spid-enter.js
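A minimal sketch of how three files can extend one constructor through its prototype, keeping a single namespace. The constructor name, members and template are hypothetical stand-ins for the real ones; in this sketch the core file must be loaded first so the constructor exists when the other two extend it.

```javascript
// --- agid-spid-enter.js (core logic; hypothetical members) ---
function AgidSpidEnter(config) {
  this.config = config;
}
AgidSpidEnter.prototype.label = function (key) {
  // `i18n` is not defined here: it is added to the prototype by another file
  return this.i18n[this.config.lang][key];
};
AgidSpidEnter.prototype.renderButton = function () {
  // `tpl` is likewise contributed by the templates file
  return this.tpl.button(this.label('enter'));
};

// --- agid-spid-enter-i18n.js (localization) ---
AgidSpidEnter.prototype.i18n = {
  it: { enter: 'Entra con SPID' },
  en: { enter: 'Sign in with SPID' }
};

// --- agid-spid-enter-tpl.js (HTML templates) ---
AgidSpidEnter.prototype.tpl = {
  button: (text) => `<button type="button" hidden>${text}</button>`
};
```

Every instance sees templates, labels and logic through the same prototype, so only one global name is exposed once the files are concatenated.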

These three files are combined during minification, driven by a configuration file conveniently split between development and production. The result is a CSS file and a JS file, each named with its semantic version, plus a "latest" copy of each, in both dev/ and dist/.
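The naming step can be sketched as a function of the version field in package.json and the target environment. The `outputFiles` helper and the exact file-name pattern are assumptions for illustration; the project's configuration may name its files differently.

```javascript
// Hypothetical naming helper: derives output names from package.json's version.
function outputFiles(pkg, env) {
  // Refuse anything that does not start like MAJOR.MINOR.PATCH
  if (!/^\d+\.\d+\.\d+/.test(pkg.version)) {
    throw new Error(`Not a semantic version: ${pkg.version}`);
  }
  const dir = env === 'production' ? 'dist' : 'dev';
  const base = 'agid-spid-enter.min';
  const files = [];
  // One versioned copy and one "latest" copy, for both JS and CSS
  for (const tag of [pkg.version, 'latest']) {
    files.push(`${dir}/${base}.${tag}.js`, `${dir}/${base}.${tag}.css`);
  }
  return files;
}

// In a build script the first argument could be require('./package.json').
console.log(outputFiles({ version: '1.2.0' }, 'production'));
```

Because the version is read rather than hard-coded, bumping it in package.json is enough to produce newly named files.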

If it can't be tested, it will break

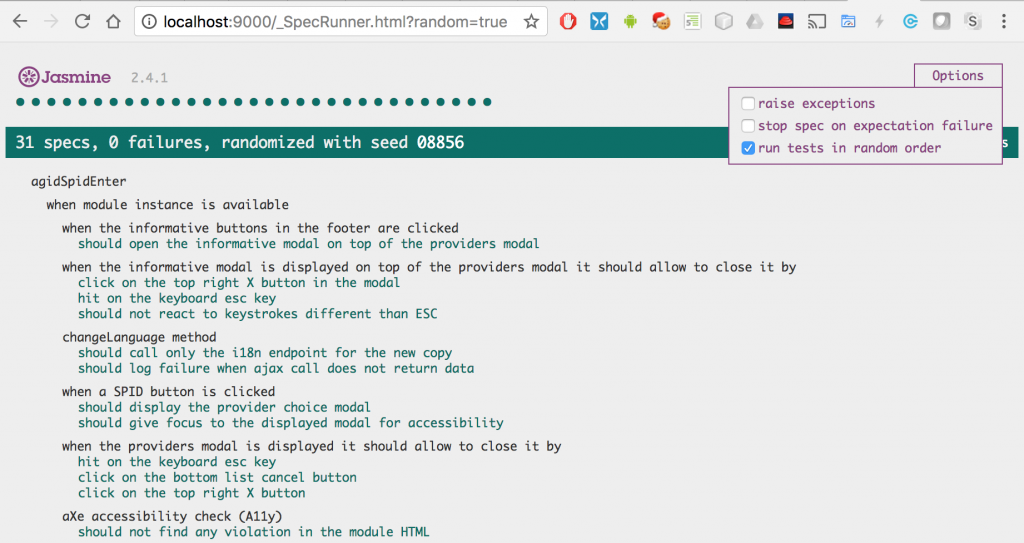

Unit tests: Jasmine

If even one small part of the code is "too difficult to test", it was designed badly: it works only with precise knowledge of mechanisms that will be obscure to the next developer who works on it, and who will very likely compromise it unwittingly.

Unit tests, besides being present, should also be true "units": the order in which they are executed must not affect the outcome of any of them. This also makes it possible to add, remove or edit any test without affecting the others.
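In Jasmine this is usually achieved with `beforeEach`, which rebuilds the fixture before every spec, and recent Jasmine versions run specs in random order by default, which exposes hidden dependencies between them. The stand-in below is not Jasmine (so it runs anywhere); it only mimics that pattern with a hypothetical widget state to show why a fresh fixture makes order irrelevant and a shared one does not.

```javascript
// Not Jasmine: a tiny stand-in mimicking beforeEach + it.
function runInOrder(tests, makeFixture, order) {
  const results = {};
  for (const name of order) {
    const fixture = makeFixture(); // like beforeEach: fresh state for every test
    results[name] = tests[name](fixture);
  }
  return results;
}

// Hypothetical widget state used as fixture
const makeFixture = () => ({ openPanels: [] });

const tests = {
  opensPanel: (widget) => {
    widget.openPanels.push('providers');
    return widget.openPanels.length === 1;
  },
  startsClosed: (widget) => widget.openPanels.length === 0
};

// Fresh fixture per test: every test passes in both orders.
const forward = runInOrder(tests, makeFixture, ['opensPanel', 'startsClosed']);
const reverse = runInOrder(tests, makeFixture, ['startsClosed', 'opensPanel']);

// Anti-pattern: one fixture shared by all tests, so state leaks between them
// and `startsClosed` fails when it happens to run after `opensPanel`.
const shared = makeFixture();
const leaky = runInOrder(tests, () => shared, ['opensPanel', 'startsClosed']);
```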

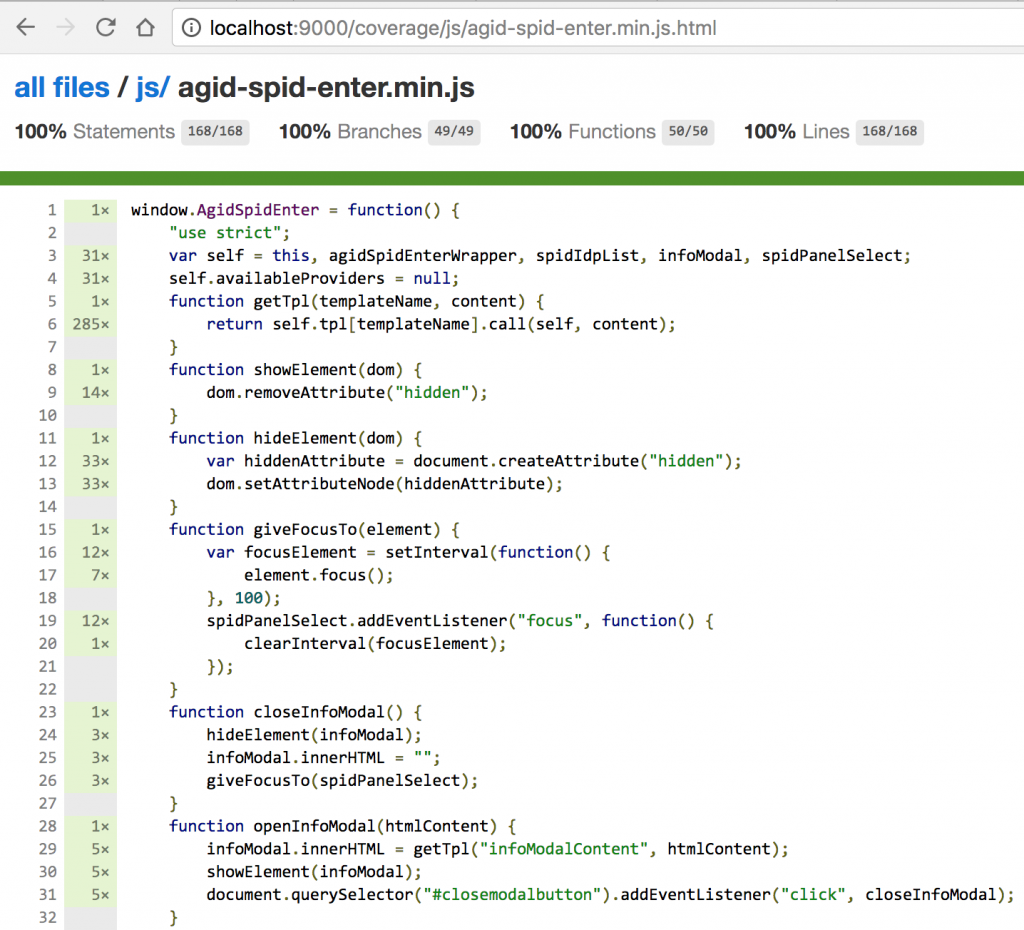

Code coverage: Istanbul

Writing tests is surely a good step, and starting from the tests and writing the code afterwards, TDD style, is even better. Still, you can't truly know whether every case has been covered without an analysis that runs while the tests are executed. This is where Istanbul helps: it instruments the code, and after the Jasmine tests have run it reports which statements, branches and functions were actually exercised.
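To turn the report into a hard check, Istanbul's `json-summary` reporter writes a `coverage-summary.json` whose `total` entry holds `lines`, `statements`, `functions` and `branches`, each with a `pct` field (tools such as nyc also offer this directly through `check-coverage`). The gate below is a hypothetical sketch; the 90% threshold and the sample figures are made up for illustration.

```javascript
// Hypothetical coverage gate over an Istanbul json-summary report.
const METRICS = ['lines', 'statements', 'functions', 'branches'];

function coverageFailures(summary, threshold) {
  return METRICS
    .filter((metric) => summary.total[metric].pct < threshold)
    .map((metric) => `${metric}: ${summary.total[metric].pct}% < ${threshold}%`);
}

// In a real build the summary would be read from disk, e.g.
// JSON.parse(fs.readFileSync('coverage/coverage-summary.json', 'utf8')),
// and a non-empty result would end with process.exit(1).
const sample = {
  total: {
    lines: { total: 200, covered: 196, skipped: 0, pct: 98 },
    statements: { total: 220, covered: 214, skipped: 0, pct: 97.27 },
    functions: { total: 40, covered: 40, skipped: 0, pct: 100 },
    branches: { total: 60, covered: 51, skipped: 0, pct: 85 }
  }
};

console.log(coverageFailures(sample, 90)); // [ 'branches: 85% < 90%' ]
```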

Accessibility test: Axe

Web accessibility, known in the industry by the numeronym a11y, is one of those recurring resolutions that keep being postponed, left to the mindfulness of developers while drafting templates. But we finally have powerful automated tools that can oversee and guide us on the basic HTML validity of the product.

Axe is a JavaScript library that can be used together with Jasmine to validate an HTML page: a full remote page, one running on localhost, or fragments generated while the Jasmine tests execute. The latter makes it possible to wait for dynamic events on the page and test the partials that are not available at landing time but are used on specific occasions.

This way, if anything required for accessibility is missing from our HTML, or a regression is inadvertently introduced, a test will fail, point to the problem and prevent us from committing inaccessible code.
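A hedged sketch of a helper a Jasmine spec could use: it calls `axe.run` (which returns a Promise when no callback is given) and turns each entry of the result's `violations` array into a readable message. The helper name and the `#providers` selector are hypothetical; the axe object is passed in as a parameter so a fake can stand in outside the browser.

```javascript
// Hypothetical helper: `axeLib` is the global `axe` from axe-core in the
// browser, taken as a parameter so tests can substitute a fake.
async function accessibilityProblems(axeLib, context) {
  const results = await axeLib.run(context);
  return results.violations.map((violation) => {
    // Each violation lists the offending nodes by CSS selector
    const targets = violation.nodes.map((node) => node.target.join(' ')).join(', ');
    return `${violation.id} (${violation.impact}): ${violation.help} -> ${targets}`;
  });
}

// Possible use in an async Jasmine spec, after the widget opened a panel:
//   it('renders an accessible providers panel', async () => {
//     const problems = await accessibilityProblems(axe, document.querySelector('#providers'));
//     expect(problems).toEqual([]);
//   });
```

Returning messages instead of a boolean means a failing spec shows which rule was broken and where, rather than just "expected false to be true".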

A system like this is surely more reliable than the fuzzy resolution to remember accessibility, which is a bit like swearing to yourself that you'll start going to the gym in January… 🙄

Awesome, but where do I get started exactly?

From the terminal, the steps are:

Clone the project from GitHub:

```sh
git clone https://github.com/srescio/spid-smart-button.git
```

Enter the project directory and install the dependencies:

```sh
cd spid-smart-button && npm install
```

If everything installed correctly, the asset build starts automatically, followed by the localhost server startup and the grunt watcher for source changes. If something went wrong, or to run these three steps again later, use:

```sh
npm start
```

Now you can visit http://localhost:9000/, from which you can reach:

- demo/development: http://localhost:9000/index.html

- Jasmine unit test: http://localhost:9000/_SpecRunner.html

- Istanbul code coverage: http://localhost:9000/coverage/dev/agid-spid-enter.min.js.html

If you're about to develop a new feature, bump the semantic version in package.json#L3 after creating a new branch: it will be propagated to the names of the newly minified files and to the configurations.

Edit any file under the src/js and src/scss directories and you're ready to commit 🙂